YouTube has introduced new controls that allow advertisers and media agencies to prevent ads running against content that has excessive swearing, is sexually provocative, or is “sensational” and “shocking”.

In a 'Brand Care Playbook' document obtained by AdNews, YouTube has outlined how it intends to improve brand safety amid a global advertising boycott of its video sharing platform, after several cases where ads were being placed against undesirable content.

In Australia, Telstra, Holden, Vodafone and the government are among the organisations that have stopped advertising on YouTube until a solution is found.

More than 400 hours of new video content is uploaded on YouTube every minute, making the task of policing it incredibly challenging, even by the tech giant's standards.

While high profile examples have dominated media headlines in recent weeks, in reality a tiny fraction of the ads on YouTube are placed against dodgy material.

Recently, Google chief business officer Philipp Schindler said the volume of videos flagged is “1/1000th of a percent of advertisers total impressions”.

Upscaling enforcement

To help weed out undesirable videos, Google says it is “significantly increasing” its enforcement team that reviews content for suitability on a daily basis.

Google has also established a 'rapid response program' that it says will respond to any questions or concerns about YouTube content within a few hours.

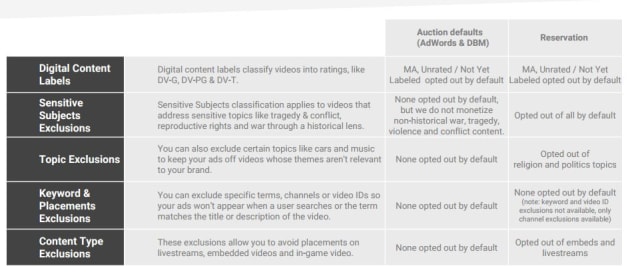

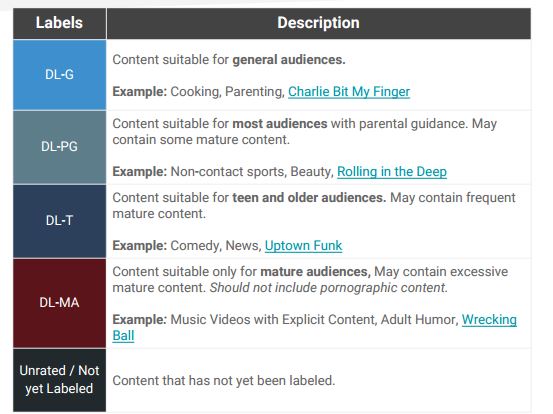

Advertisers and their agency partners will have greater control through YouTube's brand care tools. These allow brands to prevent ads from running against unsuitable videos by controlling which video classifications are acceptable.

They also allow advertisers to exclude videos by subject, topic and keyword, as well as to exclude certain types of videos, such as livestreams, embedded videos or in-game videos.

Sensitive subject exclusions

Previously, sensitive subject exclusions only covered social issues, such as discrimination, identity relations, scandals and investigations, reproductive rights, firearms and weapons. Videos about wars, disasters and accidents were also considered sensitive subjects.

The new exclusions expand the definition of 'sensitive' to include sexually lewd pictures and text, crude content designed to shock viewers and a heavy use of profane language.

Topic exclusions, such as religion or politics, cover 60 categories and 1,500 subcategories. Politics and religion are excluded by default for reservation buys.

Another change Google is making is to allow AdWords advertisers to manage and implement placement exclusions at the account level.

For Reservation and Google Preferred buyers, keyword and video exclusions are not available. Only channel exclusions apply for reservation buys, which have already been highly vetted.

Many media agencies have tough controls and resources dedicated to vetting video content. GroupM and Omnicom have enlisted the services of video ratings vendors to provide more analysis on brand safe content on video sharing platforms.

Agencies often use whitelists as a default to ensure that ads served on Google's preferred network only show up on websites they deem brand safe, while blacklists are used as an additional measure to ban any dodgy sites they come across.
