This article is the latest in the series of ad tech reviews. This time the spotlight is on Data Management Platforms (DMPs), specifically the subgroup of DMPs that verify the types of audience exposed to a digital ad campaign. For example, this technology verifies whether an audience is mostly male or female, young or old, interested in sports or travel, and so on.

Just like in previous tests, this review needed to be fair and transparent for the results to be understood. The goal of this test was not to prove that one DMP was "better" than another. Instead, the intention behind the test was to get an understanding of the overall industry by looking at the results from multiple DMPs simultaneously.

This test used a single 300x250 creative from the "Kiss Goodbye to Multiple Sclerosis" awareness campaign. The campaign reached over 450,000 unique users. The media investment, worth thousands of dollars, was kindly donated by AppNexus, and the ad serving was provided free of charge by Sizmek. We are very grateful for their continued support and generosity. In total, over 150 people clicked on the ads and went to the Multiple Sclerosis donation site.

“Smart marketers and agencies need to ask tough questions of their tech partners, and AppNexus supports GroupM’s efforts to spotlight areas of ad tech that benefit from greater scrutiny. We’re also very happy to support MS awareness along the way!” Dave Osborn, VP APAC for AppNexus.

Seven audience DMPs were approached with a request to donate a tracking pixel for this campaign. Five of the seven agreed to the test; the only two that abstained were Google and Facebook.

To ensure that the test was as fair as possible, (a) the test was independently administered by the great team at Sizmek; (b) the programmatic buying was run by the experts at GroupM Connect; (c) the programmatic media was split evenly over five premium media partners (Yahoo, Fairfax, MCN, Mi9 and News Digital Media); and (d) the results were independently analysed by Dr Nico Neumann from the University of South Australia.

The results of the test were very interesting:

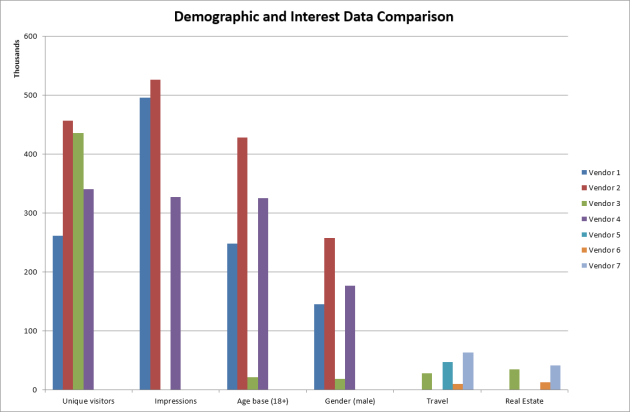

Image 1 - Overview of Key Audience Data Sets

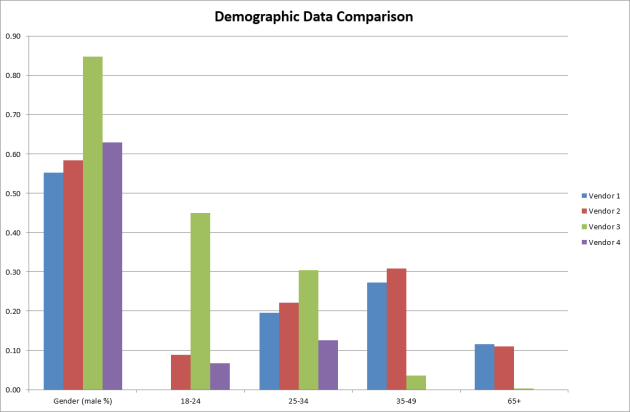

Image 2 - Overview of Demographic Audience Data Comparison

The results of this test were fascinating. I've tried to break them down into a number of key themes:

1. Observation 1 - Get the Basics Right

It was shocking to see that some of these ad tech companies didn't have the basics. Counting (a) impressions and (b) unique users, and being able to display these results (c) in a daily breakdown and (d) in a self-service interface, is an absolute must.

In summary: How can one trust a technology to give accurate, sophisticated results if it can't measure the basics? Would you trust your General Practitioner if he or she didn't know how to take your blood pressure?

2. Observation 2 - Deterministic, not Probabilistic Data

Vendors 1 and 4 are underpinned by a deterministic data-set. Deterministic data comes from users who log in to an application and freely give private information about themselves. A good example of deterministic data is Facebook, whereby users log in and share their age and gender, and there is a social system in place to ensure that the data is relatively accurate. Vendors 2, 3 and 5 had probabilistic data. Probabilistic data is "guesstimated" by looking at the browsing history of a user and guessing which category they belong to.

In summary: To follow on from the analogy above: this would be like your GP guessing your blood pressure by looking at what food you purchased and put in your fridge over the last 28 days. Statistically speaking it may be "interesting" but it's hardly accurate.
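To make the distinction concrete, here is a minimal Python sketch. It is not any vendor's actual method: the function names, the profile fields and the per-category weights are all hypothetical, chosen only to show how a declared attribute differs from one inferred from browsing history.

```python
from collections import Counter


def deterministic_gender(profile):
    """Return the gender the user declared at login, or None if not declared."""
    return profile.get("gender")


def probabilistic_gender(visited_categories, category_weights):
    """Guess a gender by summing per-category weights over the browsing history.

    Returns (label, confidence), where confidence is the winning label's
    share of the total score. Returns (None, 0.0) if nothing can be scored.
    """
    scores = Counter()
    for category in visited_categories:
        for label, weight in category_weights.get(category, {}).items():
            scores[label] += weight
    if not scores:
        return None, 0.0
    label, score = scores.most_common(1)[0]
    return label, score / sum(scores.values())


# Hypothetical weights, for illustration only (not real audience statistics).
CATEGORY_WEIGHTS = {
    "sports": {"male": 0.6, "female": 0.4},
    "travel": {"male": 0.5, "female": 0.5},
    "fashion": {"male": 0.3, "female": 0.7},
}

# The same imaginary user, seen through both kinds of data.
declared = deterministic_gender({"gender": "female", "age": 31})
guess, confidence = probabilistic_gender(
    ["sports", "sports", "travel"], CATEGORY_WEIGHTS
)
print(f"declared: {declared}; guessed: {guess} (confidence {confidence:.2f})")
```

In this made-up case the user declared one gender at login, while the browsing-based guess lands on the other with only modest confidence, which is the kind of gap the GP analogy describes.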

3. Observation 3 - Data Quality Beats Quantity

One key data-set that is often used as a proxy for data quality is People 18-24. There was a discrepancy of 38 percentage points across the group for this segment: one vendor reported that 7% of the audience was in this category, while another reported 45%.

In summary: To follow on from the analogy above: this would be like visiting two different GPs and one saying that you had high blood pressure and the other saying that you had low blood pressure. How could you trust either?
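The People 18-24 discrepancy can be reproduced with simple arithmetic. The sketch below uses only the two figures quoted in this article (7% and 45%); the other vendors' values are not given here, so they are left out, and the dictionary keys are placeholder labels rather than vendor names.

```python
# Share of the audience classified as People 18-24, as quoted in the article.
# Only the lowest and highest vendor figures are given, so only those appear.
reported_share_pct = {"lowest vendor": 7, "highest vendor": 45}


def spread(shares):
    """Return (gap in percentage points, ratio of highest to lowest share)."""
    low = min(shares.values())
    high = max(shares.values())
    return high - low, high / low


gap_points, ratio = spread(reported_share_pct)
print(f"Gap: {gap_points} percentage points; highest is {ratio:.1f}x the lowest")
# prints: Gap: 38 percentage points; highest is 6.4x the lowest
```

Expressed as a ratio, the highest estimate is more than six times the lowest for the same audience.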

In conclusion: One upcoming milestone for the media industry is to be able to migrate media dollars from TV to online digital advertising. This migration will require each of the TV stations to be able to measure the audiences that watch their TV shows. Think about a scenario whereby each TV station uses (a) different data-sets to verify their audiences, (b) different methodologies for determining this data and (c) no transparency in how these data-sets are created. It's important that we (as an industry) hold the digital / programmatic data-sets to a higher degree of accountability and scrutiny.

The next steps

1. Clear Data Origin - Ask your data provider whether their data is deterministic, probabilistic or a mix of both. For example: Does the data come from people logging in and sharing their personal information, or is it guessed by looking at their browsing history?

2. Data Classification Methodology - Ask your data provider to share their rules of classification. For example: why do they put a user in Segment X (e.g. male) instead of Segment Y (e.g. female)?

3. Data Validation Process - Ask your data provider to explain how they validate their data. For example: what principles are in place to test for accuracy of audiences?
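One way a validation process could work, sketched in Python under stated assumptions: the provider's segment labels are compared against a reference panel whose answers are known (for example, declared login data). The function name, user IDs and labels below are invented for illustration and do not describe any vendor's actual process.

```python
def segment_accuracy(vendor_labels, reference_labels):
    """Share of users present in both data-sets whose vendor label matches
    the reference label. Returns None if the data-sets share no users."""
    shared = vendor_labels.keys() & reference_labels.keys()
    if not shared:
        return None
    matches = sum(vendor_labels[user] == reference_labels[user] for user in shared)
    return matches / len(shared)


# Made-up example data: user ID -> age segment.
vendor = {"u1": "18-24", "u2": "25-34", "u3": "18-24", "u4": "35-44"}
panel = {"u1": "18-24", "u2": "18-24", "u3": "18-24", "u5": "45-54"}

accuracy = segment_accuracy(vendor, panel)
print(f"Accuracy on overlapping users: {accuracy:.0%}")
```

Only users that appear in both data-sets are scored, so the size of that overlap is worth asking about as well as the accuracy figure itself.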

In Closing: Asking these three simple questions could have a dramatic impact on the effectiveness and efficiency of your online digital campaigns.